参考 opencv aruco 实现对单个QR码的检测与定位

aruco是opencv_contrib的一个模块,实现了对AR码的检测/姿态估计

使用aruco需要安装opencv_contrib,本文将aruco中姿态估计用到的函数提取出来,结合zbar对QR码识别定位.

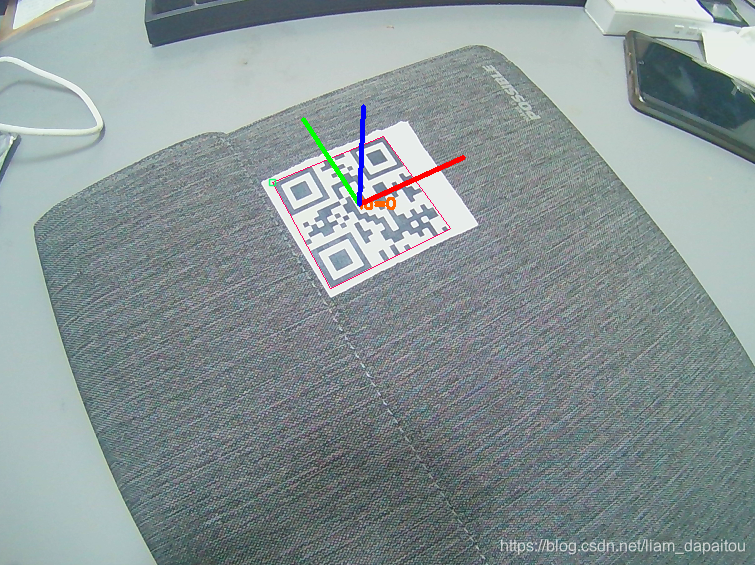

效果如下图

安装zbar

# ubuntu apt install

sudo apt install libzbar-dev

将aruco中姿态估计用到的函数提取出来

estimate_marker_pose.h

#include <opencv2/core/core.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/opencv.hpp>

using namespace std;

using namespace cv;

//绘制坐标轴

void myDrawFrameAxes(InputOutputArray image, InputArray cameraMatrix, InputArray distCoeffs,

InputArray rvec, InputArray tvec, float length, int thickness);

//绘制边框以及id

void myDrawDetectedMarkers(InputOutputArray _image, InputArrayOfArrays _corners,

InputArray _ids, Scalar borderColor);

//生成实际四个定点的坐标

void myGetSingleMarkerObjectPoints(float markerLength, OutputArray _objPoints);

//解PnP获得旋转向量和平移向量

void myEstimatePoseSingleMarkers(InputArrayOfArrays _corners, float markerLength,

InputArray _cameraMatrix, InputArray _distCoeffs,

OutputArray _rvecs, OutputArray _tvecs);

estimate_marker_pose.cpp

#include <estimate_marker_pose.h>

using namespace std;

using namespace cv;

void myDrawFrameAxes(InputOutputArray image, InputArray cameraMatrix, InputArray distCoeffs,

InputArray rvec, InputArray tvec, float length, int thickness)

{

CV_Assert(image.getMat().total() > 0);

CV_Assert(length > 0);

// project axes points

vector<Point3f> axesPoints;

axesPoints.push_back(Point3f(0, 0, 0));

axesPoints.push_back(Point3f(length, 0, 0));

axesPoints.push_back(Point3f(0, length, 0));

axesPoints.push_back(Point3f(0, 0, length));

vector<Point2f> imagePoints;

projectPoints(axesPoints, rvec, tvec, cameraMatrix, distCoeffs, imagePoints);

// draw axes lines

line(image, imagePoints[0], imagePoints[1], Scalar(0, 0, 255), thickness);

line(image, imagePoints[0], imagePoints[2], Scalar(0, 255, 0), thickness);

line(image, imagePoints[0], imagePoints[3], Scalar(255, 0, 0), thickness);

}

void myDrawDetectedMarkers(InputOutputArray _image, InputArrayOfArrays _corners,

InputArray _ids, Scalar borderColor)

{

CV_Assert(_image.getMat().total() != 0 &&

(_image.getMat().channels() == 1 || _image.getMat().channels() == 3));

CV_Assert((_corners.total() == _ids.total()) || _ids.total() == 0);

// calculate colors

Scalar textColor, cornerColor;

textColor = cornerColor = borderColor;

swap(textColor.val[0], textColor.val[1]); // text color just sawp G and R

swap(cornerColor.val[1], cornerColor.val[2]); // corner color just sawp G and B

int nMarkers = (int)_corners.total();

for(int i = 0; i < nMarkers; i++) {

Mat currentMarker = _corners.getMat(i);

CV_Assert(currentMarker.total() == 4 && currentMarker.type() == CV_32FC2);

// draw marker sides

for(int j = 0; j < 4; j++) {

Point2f p0, p1;

p0 = currentMarker.ptr< Point2f >(0)[j];

p1 = currentMarker.ptr< Point2f >(0)[(j + 1) % 4];

line(_image, p0, p1, borderColor, 1);

}

// draw first corner mark

rectangle(_image, currentMarker.ptr< Point2f >(0)[0] - Point2f(3, 3),

currentMarker.ptr< Point2f >(0)[0] + Point2f(3, 3), cornerColor, 1, LINE_AA);

// draw ID

if(_ids.total() != 0) {

Point2f cent(0, 0);

for(int p = 0; p < 4; p++)

cent += currentMarker.ptr< Point2f >(0)[p];

cent = cent / 4.;

stringstream s;

s << "id=" << _ids.getMat().ptr< int >(0)[i];

putText(_image, s.str(), cent, FONT_HERSHEY_SIMPLEX, 0.5, textColor, 2);

}

}

}

void myGetSingleMarkerObjectPoints(float markerLength, OutputArray _objPoints) {

_objPoints.create(4, 1, CV_32FC3);

Mat objPoints = _objPoints.getMat();

// set coordinate system in the middle of the marker, with Z pointing out

objPoints.ptr< Vec3f >(0)[0] = Vec3f(-markerLength / 2.f, markerLength / 2.f, 0);

objPoints.ptr< Vec3f >(0)[1] = Vec3f(markerLength / 2.f, markerLength / 2.f, 0);

objPoints.ptr< Vec3f >(0)[2] = Vec3f(markerLength / 2.f, -markerLength / 2.f, 0);

objPoints.ptr< Vec3f >(0)[3] = Vec3f(-markerLength / 2.f, -markerLength / 2.f, 0);

}

void myEstimatePoseSingleMarkers(InputArrayOfArrays _corners, float markerLength,

InputArray _cameraMatrix, InputArray _distCoeffs,

OutputArray _rvecs, OutputArray _tvecs) {

Mat markerObjPoints;

myGetSingleMarkerObjectPoints(markerLength, markerObjPoints);

int nMarkers = (int)_corners.total();

_rvecs.create(nMarkers, 1, CV_64FC3);

_tvecs.create(nMarkers, 1, CV_64FC3);

Mat rvecs = _rvecs.getMat(), tvecs = _tvecs.getMat();

for each marker, calculate its pose

parallel_for_(Range(0, nMarkers), [&](const Range& range) {

const int begin = range.start;

const int end = range.end;

for (int i = begin; i < end; i++) {

solvePnP(markerObjPoints, _corners.getMat(i), _cameraMatrix, _distCoeffs, rvecs.at<Vec3d>(i),

tvecs.at<Vec3d>(i));

}

});

}

main.cpp

#include <opencv2/core/core.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/opencv.hpp>

#include <iostream>

#include <zbar.h>

#include <estimate_marker_pose.h>

#include <opencv2/imgproc/types_c.h>

using namespace std;

using namespace zbar; //添加zbar名称空间

using namespace cv;

int main(int argc,char*argv[])

{

cout<< "CV_VERSION: " << CV_VERSION << endl;

//相机标定的参数-------

double fx = 412.433229;

double cx = 318.310004;

double fy = 414.182775;

double cy = 236.769192;

double k1 = -0.320394;

double k2 = 0.108028;

double p1 = -0.000993;

double p2 = 0.001297;

double k3 = 0;

Mat cameraMatrix = (cv::Mat_<float>(3, 3) <<

fx, 0.0, cx,

0.0, fy, cy,

0.0, 0.0, 1.0);

Mat distCoeffs = (cv::Mat_<float>(5, 1) << k1, k2, p1, p2, k3);

//相机标定的参数-------

//二维码的边长

double marker_size = 5.0; //单位 cm

//zbar::ImageScanner

ImageScanner scanner;

scanner.set_config(ZBAR_NONE, ZBAR_CFG_ENABLE, 1);

cv::VideoCapture inputVideo;

inputVideo.open(0);

inputVideo.set(cv::CAP_PROP_FRAME_WIDTH,1280);

inputVideo.set(cv::CAP_PROP_FRAME_HEIGHT,720);

while (inputVideo.grab())

{

cv::Mat image;

inputVideo.retrieve(image);//抓取视频中的一张照片

flip(image,image,1);// 水平方向镜像

Mat imageGray;

cvtColor(image,imageGray,CV_RGB2GRAY);

int width = imageGray.cols;

int height = imageGray.rows;

uchar *raw = (uchar *)imageGray.data;

Image imageZbar(width, height, "Y800", raw, width * height);

scanner.scan(imageZbar); //扫描条码

Image::SymbolIterator symbol = imageZbar.symbol_begin();

if(imageZbar.symbol_begin()==imageZbar.symbol_end())

{

cout<<"can't detect QR code!"<<endl;

}

std::vector<int> ids;

std::vector<std::vector<cv::Point2f> > corners;

std::vector<cv::Vec3d> rvecs, tvecs;

for(int i = 0;symbol != imageZbar.symbol_end();++symbol)

{

cout<<"type:"<<endl<<symbol->get_type_name()<<endl<<endl;

cout<<"data:"<<endl<<symbol->get_data()<<endl<<endl;

cout<<"data_length:"<<endl<<symbol->get_data_length()<<endl<<endl;

cout<<"location_size:"<<endl<<symbol->get_location_size()<<endl<<endl;

std::vector<cv::Point2f> corner;

corner.push_back(cv::Point2f(symbol->get_location_x(0),symbol->get_location_y(0)));

corner.push_back(cv::Point2f(symbol->get_location_x(3),symbol->get_location_y(3)));

corner.push_back(cv::Point2f(symbol->get_location_x(2),symbol->get_location_y(2)));

corner.push_back(cv::Point2f(symbol->get_location_x(1),symbol->get_location_y(1)));

corners.push_back(corner);

ids.push_back(i);

i++;

}

//求解旋转向量rvecs和平移向量tvecs

myEstimatePoseSingleMarkers(corners, marker_size, cameraMatrix, distCoeffs, rvecs, tvecs);

for (int i = 0; i < ids.size(); i ++)

{

// cv::aruco::drawDetectedMarkers(image, corners, ids);//绘制检测到的靶标的框

myDrawDetectedMarkers(image, corners, ids,Scalar(100, 0, 255));//绘制检测到的靶标的框

// cv::aruco::drawAxis(image, cameraMatrix, distCoeffs, rvecs[i], tvecs[i], 5);

myDrawFrameAxes(image, cameraMatrix, distCoeffs, rvecs[i], tvecs[i], 5, 3);

// drawFrameAxes(image, cameraMatrix, distCoeffs, rvecs[i], tvecs[i], 5, 3);//opencv 3.3.1 没有 4.2.1有

cout<<" T :"<<tvecs[i]<<endl;

cout<<" R :"<<rvecs[i]<<endl;

}

imshow("Source Image",image);

waitKey(1);

}

return 0;

}

CMakeLists.txt

# estimate_marker_pose

add_library( estimate_marker_pose src/estimate_marker_pose.cpp)

target_link_libraries(estimate_marker_pose ${OpenCV_LIBS} )

# main

add_executable(main src/main.cpp)

target_link_libraries(main zbar estimate_marker_pose ${OpenCV_LIBS} )

打印

type:

QR-Code

data:

1

data_length:

1

location_size:

4

T :[2.16432, -0.594964, 15.9725]

R :[2.47789, -0.487188, -0.156933]

版权声明:本文为liam_dapaitou原创文章,遵循 CC 4.0 BY-SA 版权协议,转载请附上原文出处链接和本声明。