I. Use Logstash filters to convert nginx's default access log and error log into JSON and write them to Elasticsearch

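- Logstash processing pipeline: input -> filter -> output

- When writing patterns, you can refer to the pattern definitions that ship with Logstash: /usr/share/logstash/vendor/bundle/jruby/2.6.0/gems/logstash-patterns-core-4.3.4/patterns/legacy/grok-patterns

- Commonly used Logstash filter plugins:

  - aggregate: aggregates the multiple log lines that belong to one event
  - bytes: converts storage units such as MB, GB and TB into bytes
  - date: parses a date from the event and uses it as the Logstash timestamp
  - geoip: looks up geographic information for an IP address and adds it to the event
  - grok: matches events against regular expressions and outputs the captures as JSON fields. Grok is often used to restructure system error logs, logs from middleware such as MySQL and ZooKeeper, and network device logs (turning non-JSON logs into JSON); the converted logs are then written to Elasticsearch for storage and visualized in Kibana
  - mutate: renames, removes, or modifies fields in an event

- Install the nginx service and start it: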

```
root@web1:/etc/logstash/conf.d# systemctl status nginx
● nginx.service - A high performance web server and a reverse proxy server
     Loaded: loaded (/lib/systemd/system/nginx.service; enabled; vendor preset: enabled)
     Active: active (running) since Fri 2022-12-02 13:12:48 UTC; 3h 9min ago
       Docs: man:nginx(8)
   Main PID: 952 (nginx)
…
```

5. Write a logstash.conf pipeline that filters the nginx access and error logs:

```
input {
  file {
    path => "/apps/nginx/logs/access.log"
    type => "nginx-accesslog"
    stat_interval => "1"
    start_position => "beginning"
  }
  file {
    path => "/apps/nginx/logs/error.log"
    type => "nginx-errorlog"
    stat_interval => "1"
    start_position => "beginning"
  }
}

filter {
  if [type] == "nginx-accesslog" {
    grok {
      match => { "message" => ["%{IPORHOST:clientip} - %{DATA:username} \[%{HTTPDATE:request-time}\] \"%{WORD:request-method} %{DATA:request-uri} HTTP/%{NUMBER:http_version}\" %{NUMBER:response_code} %{NUMBER:body_sent_bytes} \"%{DATA:referrer}\" \"%{DATA:useragent}\""] }
      remove_field => "message"
      add_field => { "project" => "magedu" }
    }
    mutate {
      convert => [ "[response_code]", "integer" ]
    }
  }

  if [type] == "nginx-errorlog" {
    grok {
      # Capture the error text as error_message: a capture named "message" is added
      # next to the original field, and remove_field would then drop both.
      match => { "message" => ["(?<timestamp>%{YEAR}[./]%{MONTHNUM}[./]%{MONTHDAY} %{TIME}) \[%{LOGLEVEL:loglevel}\] %{POSINT:pid}#%{NUMBER:threadid}\: \*%{NUMBER:connectionid} %{GREEDYDATA:error_message}, client: %{IPV4:clientip}, server: %{GREEDYDATA:server}, request: \"(?:%{WORD:request-method} %{NOTSPACE:request-uri}(?: HTTP/%{NUMBER:httpversion}))\", host: %{GREEDYDATA:domainname}"] }
      remove_field => "message"
    }
  }
}

output {
  if [type] == "nginx-accesslog" {
    elasticsearch {
      hosts => ["172.31.2.101:9200"]
      index => "magedu-nginx-accesslog-%{+yyyy.MM.dd}"
      user => "magedu"
      password => "123456"
    }
  }
  if [type] == "nginx-errorlog" {
    elasticsearch {
      hosts => ["172.31.2.101:9200"]
      index => "magedu-nginx-errorlog-%{+yyyy.MM.dd}"
      user => "magedu"
      password => "123456"
    }
  }
}
```
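To check what the access-log grok pattern extracts, here is a rough Python approximation. It hand-translates the grok macros (IPORHOST, HTTPDATE, DATA, WORD, NUMBER) into simplified regular expressions, not the exact definitions from grok-patterns, and mimics the add_field and mutate convert steps. The helper name `parse_access_line` and the sample log line are made up for illustration.

```python
import re
from typing import Optional

# Simplified stand-ins for the grok macros in the access-log pattern.
# Approximations only, not the exact definitions from grok-patterns.
IPORHOST = r"\S+"
HTTPDATE = r"\d{2}/\w{3}/\d{4}:\d{2}:\d{2}:\d{2} [+-]\d{4}"
DATA = r".*?"
WORD = r"\w+"
NUMBER = r"\d+(?:\.\d+)?"

# Python group names cannot contain "-", so request-time etc. become request_time.
ACCESS_RE = re.compile(
    rf'(?P<clientip>{IPORHOST}) - (?P<username>{DATA}) '
    rf'\[(?P<request_time>{HTTPDATE})\] '
    rf'"(?P<request_method>{WORD}) (?P<request_uri>{DATA}) HTTP/(?P<http_version>{NUMBER})" '
    rf'(?P<response_code>{NUMBER}) (?P<body_sent_bytes>{NUMBER}) '
    rf'"(?P<referrer>{DATA})" "(?P<useragent>{DATA})"'
)


def parse_access_line(line: str) -> Optional[dict]:
    """Roughly what the grok + add_field + mutate steps do to one access-log line."""
    m = ACCESS_RE.match(line)
    if m is None:
        return None  # Logstash would keep the event and tag it _grokparsefailure
    event = m.groupdict()
    event["project"] = "magedu"                           # add_field
    event["response_code"] = int(event["response_code"])  # mutate convert => integer
    return event


# Made-up line in nginx's default "combined" log format
sample = ('192.168.159.1 - - [02/Dec/2022:13:12:48 +0000] '
          '"GET /index.html HTTP/1.1" 200 612 "-" "curl/7.81.0"')
print(parse_access_line(sample))
```

Converting response_code to an integer is what later lets Elasticsearch and Kibana treat it as a number (range queries, aggregations) rather than a string.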

6. Restart the logstash service and check its status:

```
root@web1:/etc/logstash/conf.d# systemctl restart logstash.service
root@web1:/etc/logstash/conf.d# systemctl status logstash.service
● logstash.service - logstash
     Loaded: loaded (/lib/systemd/system/logstash.service; enabled; vendor preset: enabled)
     Active: active (running) since Fri 2022-12-02 16:10:25 UTC; 13min ago
   Main PID: 17831 (java)
      Tasks: 44 (limit: 2236)
     Memory: 793.3M
```

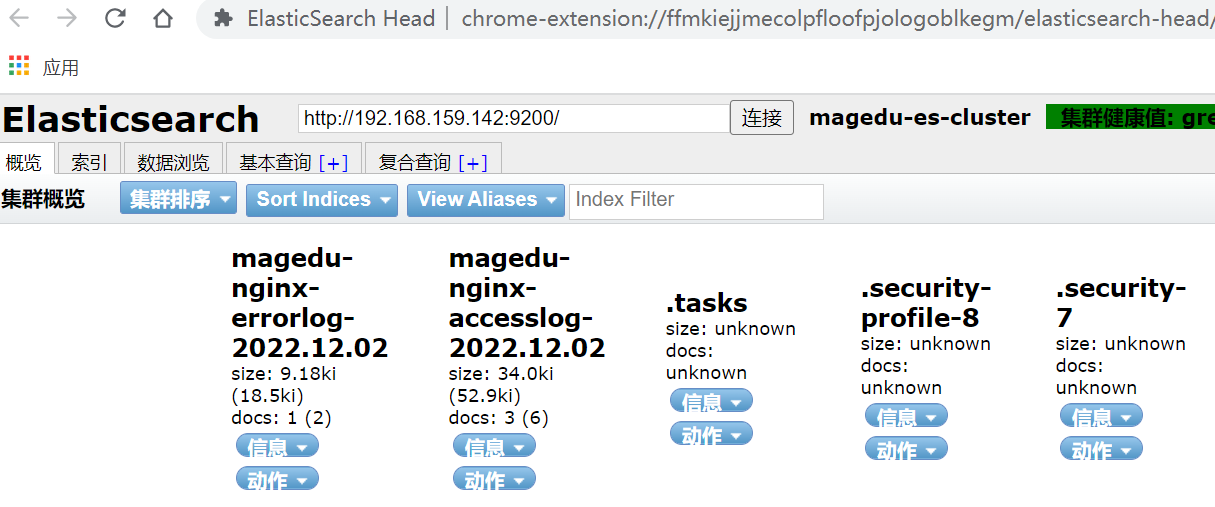

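7. Log in to Elasticsearch and check the nginx-accesslog and nginx-errorlog indices; both are visible.

8. Log in to Kibana, create data views for nginx-accesslog and nginx-errorlog, and confirm that the logs display correctly.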

II. Collect JSON-formatted nginx access logs with Logstash

1. Modify the nginx configuration so that the access log is written to /var/log/nginx/access.log in JSON format:

```
root@web1:/etc/nginx# cat nginx.conf
log_format access_json '{"@timestamp":"$time_iso8601",'
    '"host":"$server_addr",'
    '"clientip":"$remote_addr",'
    '"size":$body_bytes_sent,'
    '"responsetime":$request_time,'
    '"upstreamtime":"$upstream_response_time",'
    '"upstreamhost":"$upstream_addr",'
    '"http_host":"$host",'
    '"uri":"$uri",'
    '"domain":"$host",'
    '"xff":"$http_x_forwarded_for",'
    '"referer":"$http_referer",'
    '"tcp_xff":"$proxy_protocol_addr",'
    '"http_user_agent":"$http_user_agent",'
    '"status":"$status"}';

access_log /var/log/nginx/access.log access_json;
```
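Because nginx now writes each access-log line as a JSON object, Logstash can decode it directly (the `codec => "json"` setting in section V does this) instead of parsing it with grok. The Python sketch below shows the same decoding on a made-up sample line shaped like `access_json`. Values left unquoted in the log_format (size, responsetime) arrive as numbers, and quoted ones (status) arrive as strings. One caveat: with nginx's default escaping, a `"` inside a variable is logged as `\x22`, which is not a valid JSON escape; nginx 1.11.8 and later accept `escape=json` on `log_format` for this case. The `BROKEN` line and helper names are hypothetical.

```python
import json

# Made-up line shaped like the access_json log_format above.
SAMPLE = (
    '{"@timestamp":"2022-12-02T13:12:48+00:00","host":"192.168.159.132",'
    '"clientip":"192.168.159.1","size":612,"responsetime":0.000,'
    '"upstreamtime":"-","upstreamhost":"-","http_host":"192.168.159.132",'
    '"uri":"/index.html","domain":"192.168.159.132","xff":"-",'
    '"referer":"-","tcp_xff":"-","http_user_agent":"curl/7.81.0","status":"200"}'
)

# Hypothetical line where a User-Agent containing a double quote was logged
# with nginx's default \xXX escaping instead of escape=json.
BROKEN = '{"http_user_agent":"evil\\x22agent","status":"200"}'


def decode_access_json(line: str) -> dict:
    """Decode one access-log line; unquoted values (size, responsetime) become numbers."""
    return json.loads(line)


def is_valid_json(line: str) -> bool:
    """True if the line can be decoded as JSON."""
    try:
        json.loads(line)
        return True
    except json.JSONDecodeError:
        return False


event = decode_access_json(SAMPLE)
print(type(event["size"]).__name__, type(event["status"]).__name__)  # int str
print(is_valid_json(BROKEN))  # False
```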

Log in to Elasticsearch and check that the index was created; it was.

Log in to Kibana and open the access-log data view; the logs are displayed as parsed JSON fields.

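III. Collect Java logs with Logstash and merge multi-line entries

Install logstash on ES1.

Modify the logstash service unit /lib/systemd/system/logstash.service, then reload systemd:

```
systemctl daemon-reload
```

Write the Logstash pipeline es-log-to-es.conf, using the multiline codec to merge multi-line log entries: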

```
root@es1:/data/eslogs# cd /etc/logstash/conf.d/
root@es1:/etc/logstash/conf.d# ls
es-log-to-es.conf
root@es1:/etc/logstash/conf.d# cat es-log-to-es.conf
input {
  file {
    path => "/data/eslogs/magedu-es-cluster.log"
    type => "eslog"
    stat_interval => "1"
    start_position => "beginning"
    codec => multiline {
      #pattern => "^\["
      pattern => "^\[[0-9]{4}\-[0-9]{2}\-[0-9]{2}"
      negate => "true"
      what => "previous"
    }
  }
}

output {
  if [type] == "eslog" {
    elasticsearch {
      hosts => ["192.168.159.142:9200"]
      index => "magedu-eslog-%{+YYYY.ww}"
      user => "magedu"
      password => "123456"
    }
  }
}
```
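The multiline settings above (`negate => "true"`, `what => "previous"`) mean that any line which does not start with `[YYYY-MM-DD` is appended to the event before it, so a Java stack trace stays attached to the log line that produced it. A simplified Python model of that rule follows. The function name and the sample log are made up. The real codec also holds back the last event until the next start line arrives (or until `auto_flush_interval` expires, if set), whereas this sketch simply flushes at the end of its input.

```python
import re
from typing import Iterable, List

# Same pattern as the codec: a new event starts with "[YYYY-MM-DD"
START = re.compile(r"^\[[0-9]{4}\-[0-9]{2}\-[0-9]{2}")


def merge_multiline(lines: Iterable[str]) -> List[str]:
    """Group raw lines into events the way negate=true / what=previous does."""
    events: List[str] = []
    buffer: List[str] = []
    for line in lines:
        # negate => true: lines that do NOT match the pattern are continuations
        if START.match(line) is None and buffer:
            buffer.append(line)  # what => previous: attach to the previous event
        else:
            if buffer:
                events.append("\n".join(buffer))
            buffer = [line]
    if buffer:
        events.append("\n".join(buffer))  # flush the last event at end of input
    return events


# Made-up Elasticsearch-style log with a stack trace under the WARN line
LOG = [
    "[2022-12-02T16:10:25,123][INFO ][o.e.n.Node] started",
    "[2022-12-02T16:11:00,456][WARN ][o.e.c.r.a.DiskThresholdMonitor] high disk watermark",
    "java.lang.IllegalStateException: example",
    "\tat org.example.Foo.bar(Foo.java:42)",
    "[2022-12-02T16:12:00,789][INFO ][o.e.c.m.MetadataCreateIndexService] created index",
]
for event in merge_multiline(LOG):
    print(repr(event))
```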

Start the logstash service: systemctl start logstash.service

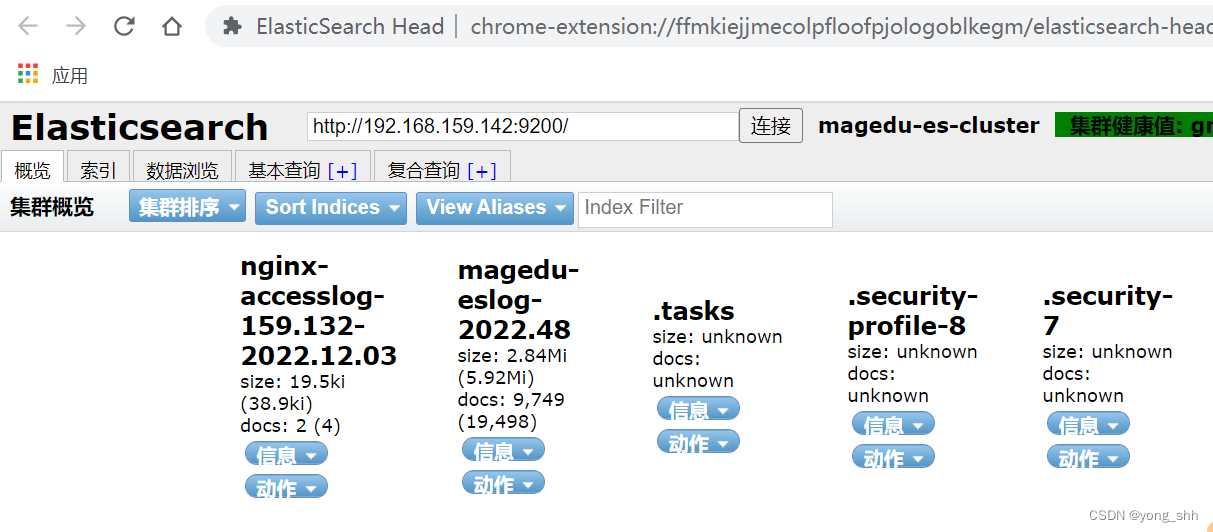

Log in to Elasticsearch and check that the magedu-eslog index was created; it was.

Log in to Kibana, create a magedu-eslog data view, and view the logs; they display normally.

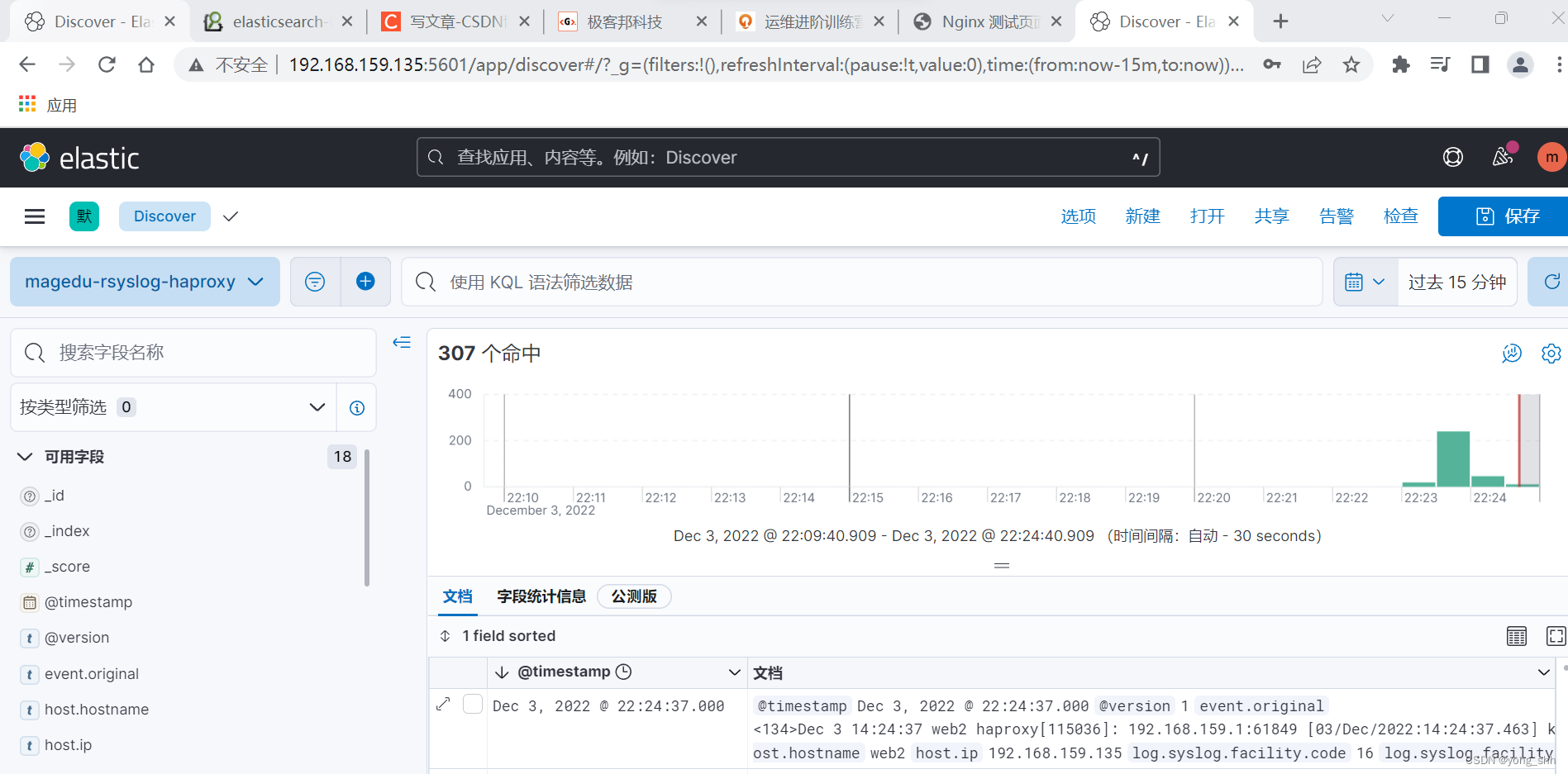

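IV. Collect syslog-type logs with Logstash (using HAProxy in place of a network device)

Flow: deploy HAProxy (standing in for a network device) -> rsyslog -> logstash -> Elasticsearch -> Kibana

Deploy HAProxy on web2:

```
root@web2:~# apt update && apt install -y haproxy
```

Edit the HAProxy configuration file (vim /etc/haproxy/haproxy.cfg) and add the listeners: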

```
listen kibana
  bind 0.0.0.0:5601    # listening port
  log global           # use the log settings from the global section
  server 192.168.159.141 192.168.159.141:5601 check inter 2s fall 3 rise 3    # backend Kibana server

listen elasticsearch-9200
  bind 0.0.0.0:9200
  log global
  server 192.168.159.142 192.168.159.142:9200 check inter 2s fall 3 rise 3
  server 192.168.159.143 192.168.159.143:9200 check inter 2s fall 3 rise 3
```

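Modify the rsyslog configuration; port 514 is the port the network device (here HAProxy, via rsyslog) is configured to send to:

```
vim /etc/rsyslog.d/49-haproxy.conf

:programname, startswith, "haproxy" {
  #/var/log/haproxy.log
  # forward HAProxy messages to 192.168.159.135:514 (@@ = TCP, @ = UDP)
  @@192.168.159.135:514
  stop
}
```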

```
root@web2:~# systemctl restart rsyslog.service
```
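rsyslog forwards each message with a syslog priority header such as `<134>` at the front, and the Logstash syslog input decodes that header into facility and severity fields. The arithmetic is PRI = facility * 8 + severity. The Python sketch below assumes HAProxy logs to facility local0 (Ubuntu's default haproxy.cfg uses `log /dev/log local0`; check yours) at severity info, which gives 16 * 8 + 6 = 134. The helper names and the sample message are hypothetical.

```python
import re
from typing import Tuple

SEVERITIES = ["emergency", "alert", "critical", "error",
              "warning", "notice", "informational", "debug"]
LOCAL0 = 16  # syslog facility code for local0
INFO = 6     # syslog severity code for informational


def encode_pri(facility: int, severity: int) -> int:
    """PRI value that prefixes a syslog message as <PRI>."""
    return facility * 8 + severity


def decode_pri(message: str) -> Tuple[int, int, str]:
    """Split the leading <PRI> of a syslog message into facility and severity."""
    m = re.match(r"<(\d{1,3})>", message)
    if m is None:
        raise ValueError("message has no <PRI> header")
    facility, severity = divmod(int(m.group(1)), 8)
    return facility, severity, SEVERITIES[severity]


print(encode_pri(LOCAL0, INFO))  # 134
print(decode_pri("<134>Dec  2 16:10:25 web2 haproxy[1234]: example message"))
# (16, 6, 'informational')
```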

Configure Logstash with the syslog input plugin to collect the logs arriving on port 514:

```
root@web2:~# cat /etc/logstash/conf.d/network-to-es.conf
input {
  syslog {
    type => "rsyslog-haproxy"
    port => "514"    # local port to listen on
  }
}

output {
  if [type] == "rsyslog-haproxy" {
    elasticsearch {
      hosts => ["192.168.159.142:9200"]
      index => "magedu-rsyslog-haproxy-%{+YYYY.ww}"
      user => "magedu"
      password => "123456"
    }
  }
}
```
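The index setting `%{+YYYY.ww}` (also used in section III) creates one index per week. Assuming Logstash formats `%{+...}` with Joda-Time, `YYYY` is the calendar year of era while `ww` is the ISO-8601 week of the week-based year (Joda's symbol for the week-based year is `xxxx`). Around New Year the two years can disagree, so two different weeks can end up in the same index. The Python sketch below models both format strings with the standard library's ISO calendar. It does not call Logstash, and the function names are hypothetical.

```python
from datetime import date


def suffix_calendar_year(d: date) -> str:
    """Models %{+YYYY.ww}: calendar year + ISO week number."""
    _, iso_week, _ = d.isocalendar()
    return f"{d.year:04d}.{iso_week:02d}"


def suffix_week_year(d: date) -> str:
    """Models %{+xxxx.ww}: ISO week-based year + ISO week number."""
    iso_year, iso_week, _ = d.isocalendar()
    return f"{iso_year:04d}.{iso_week:02d}"


for d in (date(2024, 1, 2), date(2024, 12, 30)):
    print(d, suffix_calendar_year(d), suffix_week_year(d))
# 2024-01-02 2024.01 2024.01
# 2024-12-30 2024.01 2025.01   <- calendar-year variant collides with January
```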

V. Collect logs with Logstash and write them to Redis, then consume them into Elasticsearch with another Logstash instance while preserving the JSON parsing

Install redis:

```
root@web1:~# apt install redis -y
```

Configure redis:

```
root@web1:~# vim /etc/redis/redis.conf
save ""
bind 0.0.0.0
requirepass 123456
```

Restart the redis service:

```
root@web1:~# systemctl restart redis-server.service
```

Connect to the redis service:

```
root@web1:~# telnet 192.168.159.132 6379
Trying 192.168.159.132...
Connected to 192.168.159.132.
Escape character is '^]'.
auth 123456
```

Logstash was already installed on web1; write the Logstash pipeline magedu-log-to-redis.conf:

```
root@web1:/etc/logstash/conf.d# cat magedu-log-to-redis.conf
input {
  file {
    path => "/var/log/nginx/access.log"
    type => "magedu-nginx-accesslog"
    start_position => "beginning"
    stat_interval => "1"
    codec => "json"    # decode the JSON-formatted log lines
  }
  file {
    path => "/apps/nginx/logs/error.log"
    type => "magedu-nginx-errorlog"
    start_position => "beginning"
    stat_interval => "1"
  }
}

filter {
  if [type] == "magedu-nginx-errorlog" {
    grok {
      # Capture the error text as error_message: a capture named "message" is added
      # next to the original field, and remove_field would then drop both.
      match => { "message" => ["(?<timestamp>%{YEAR}[./]%{MONTHNUM}[./]%{MONTHDAY} %{TIME}) \[%{LOGLEVEL:loglevel}\] %{POSINT:pid}#%{NUMBER:threadid}\: \*%{NUMBER:connectionid} %{GREEDYDATA:error_message}, client: %{IPV4:clientip}, server: %{GREEDYDATA:server}, request: \"(?:%{WORD:request-method} %{NOTSPACE:request-uri}(?: HTTP/%{NUMBER:httpversion}))\", host: %{GREEDYDATA:domainname}"] }
      remove_field => "message"    # remove the original log line
    }
  }
}

output {
  if [type] == "magedu-nginx-accesslog" {
    redis {
      data_type => "list"
      key => "magedu-nginx-accesslog"
      host => "192.168.159.132"
      port => "6379"
      db => "0"
      password => "123456"
    }
  }
  if [type] == "magedu-nginx-errorlog" {
    redis {
      data_type => "list"
      key => "magedu-nginx-errorlog"
      host => "192.168.159.132"
      port => "6379"
      db => "0"
      password => "123456"
    }
  }
}
```
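The error-log pattern above can be approximated in Python in the same way, to check what it extracts. The regex is a hand simplification of the grok macros (TIME, LOGLEVEL, IPV4 and so on), and the sample error line is made up. The free-text part is stored under `error_message` rather than `message`: in grok, a capture named `message` is added alongside the original field (unless `overwrite` is set), and `remove_field => "message"` would then drop both. The helper name is hypothetical.

```python
import re
from typing import Optional

# Hand-simplified version of the error-log grok pattern.
ERROR_RE = re.compile(
    r'(?P<timestamp>\d{4}[./]\d{2}[./]\d{2} \d{2}:\d{2}:\d{2}) '
    r'\[(?P<loglevel>\w+)\] (?P<pid>\d+)#(?P<threadid>\d+): '
    r'\*(?P<connectionid>\d+) (?P<error_message>.*), '
    r'client: (?P<clientip>\d{1,3}(?:\.\d{1,3}){3}), '
    r'server: (?P<server>.*), '
    r'request: "(?P<request_method>\w+) (?P<request_uri>\S+) HTTP/(?P<httpversion>[\d.]+)", '
    r'host: (?P<domainname>.*)'
)


def parse_error_line(line: str) -> Optional[dict]:
    """Return the extracted fields, or None where grok would add _grokparsefailure."""
    m = ERROR_RE.match(line)
    return m.groupdict() if m else None


sample = ('2022/12/02 16:20:01 [error] 952#952: *12 open() '
          '"/usr/share/nginx/html/favicon.ico" failed (2: No such file or directory), '
          'client: 192.168.159.1, server: localhost, '
          'request: "GET /favicon.ico HTTP/1.1", host: "192.168.159.132"')
print(parse_error_line(sample))

# Lines without client/request/host (e.g. startup notices) do not match at all.
print(parse_error_line("2022/12/02 13:12:48 [notice] 952#952: signal process started"))
```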

On web2, write the redis -> logstash pipeline so that the logs queued in Redis on web1 are consumed by the Logstash instance on web2:

```
root@web2:/etc/logstash/conf.d# cat magedu-log-redis-logstash.conf
input {
  redis {
    data_type => "list"
    key => "magedu-nginx-accesslog"
    host => "192.168.159.132"
    port => "6379"
    db => "0"
    password => "123456"
  }
  redis {
    data_type => "list"
    key => "magedu-nginx-errorlog"
    host => "192.168.159.132"
    port => "6379"
    db => "0"
    password => "123456"
  }
}

output {
  if [type] == "magedu-nginx-accesslog" {
    elasticsearch {
      hosts => ["192.168.159.141:9200"]
      index => "redis-magedu-nginx-accesslog-%{+yyyy.MM.dd}"
      user => "magedu"
      password => "123456"
    }
  }
  if [type] == "magedu-nginx-errorlog" {
    elasticsearch {
      hosts => ["192.168.159.141:9200"]
      index => "redis-magedu-nginx-errorlog-%{+yyyy.MM.dd}"
      user => "magedu"
      password => "123456"
    }
  }
}
```
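The Redis list acts as a FIFO buffer between the two Logstash instances. With `data_type => "list"`, the redis output appends each event with RPUSH and the redis input takes events from the head of the list (BLPOP). Both plugins default to the json codec, so each event, including its `type` field and any fields already parsed on web1, travels as a JSON document. That is why the `[type]` conditionals on web2 still work. The sketch below models this in memory with `collections.deque` instead of a real Redis server; the list keys and index names mirror the configs above, but the function names are hypothetical.

```python
import json
from collections import deque
from datetime import datetime
from typing import Deque, Dict

# In-memory stand-ins for the two Redis lists (keys as in the configs above)
QUEUES: Dict[str, Deque[str]] = {
    "magedu-nginx-accesslog": deque(),
    "magedu-nginx-errorlog": deque(),
}


def web1_output(event: dict) -> None:
    """Producer side: JSON-encode the event and RPUSH it (append on the right)."""
    QUEUES[event["type"]].append(json.dumps(event))


def web2_input(key: str) -> dict:
    """Consumer side: LPOP the oldest entry (pop from the left) and JSON-decode it."""
    return json.loads(QUEUES[key].popleft())


def web2_index(event: dict, day: datetime) -> str:
    """Index name as in the web2 output: redis-<type>-yyyy.MM.dd."""
    return f"redis-{event['type']}-{day:%Y.%m.%d}"


web1_output({"type": "magedu-nginx-accesslog", "clientip": "192.168.159.1", "status": "200"})
web1_output({"type": "magedu-nginx-errorlog", "loglevel": "error"})

ev = web2_input("magedu-nginx-accesslog")
print(ev["type"], ev["clientip"])             # magedu-nginx-accesslog 192.168.159.1
print(web2_index(ev, datetime(2022, 12, 2)))  # redis-magedu-nginx-accesslog-2022.12.02
```

In real Logstash the date in the index name comes from the event's @timestamp (in UTC), not from a value passed in as here.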

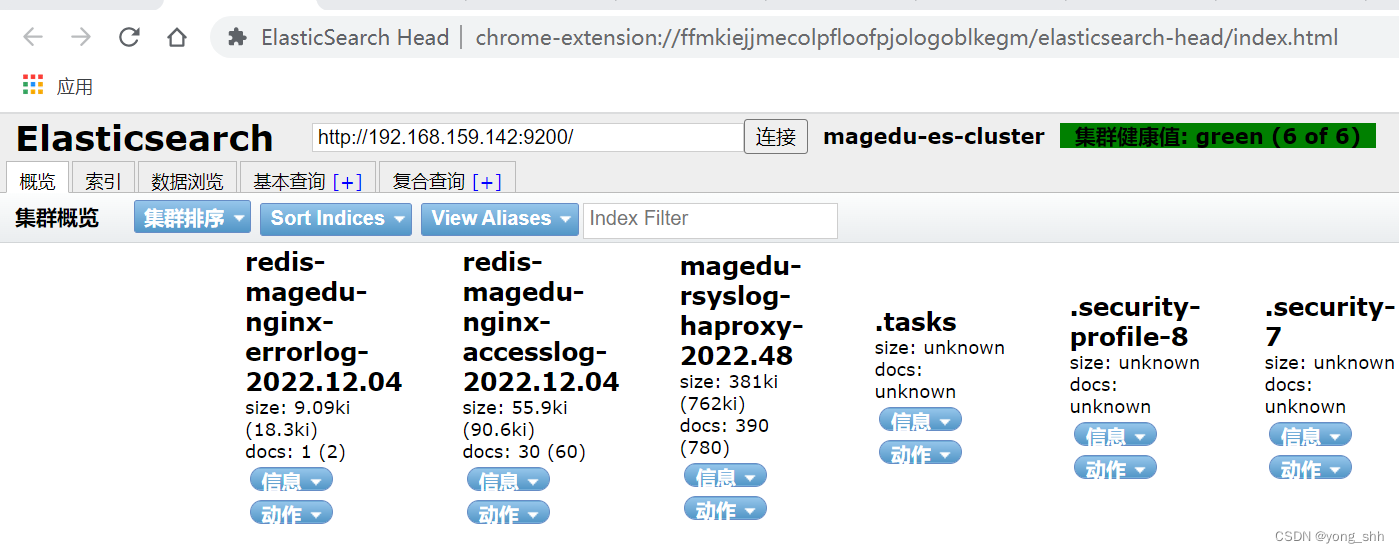

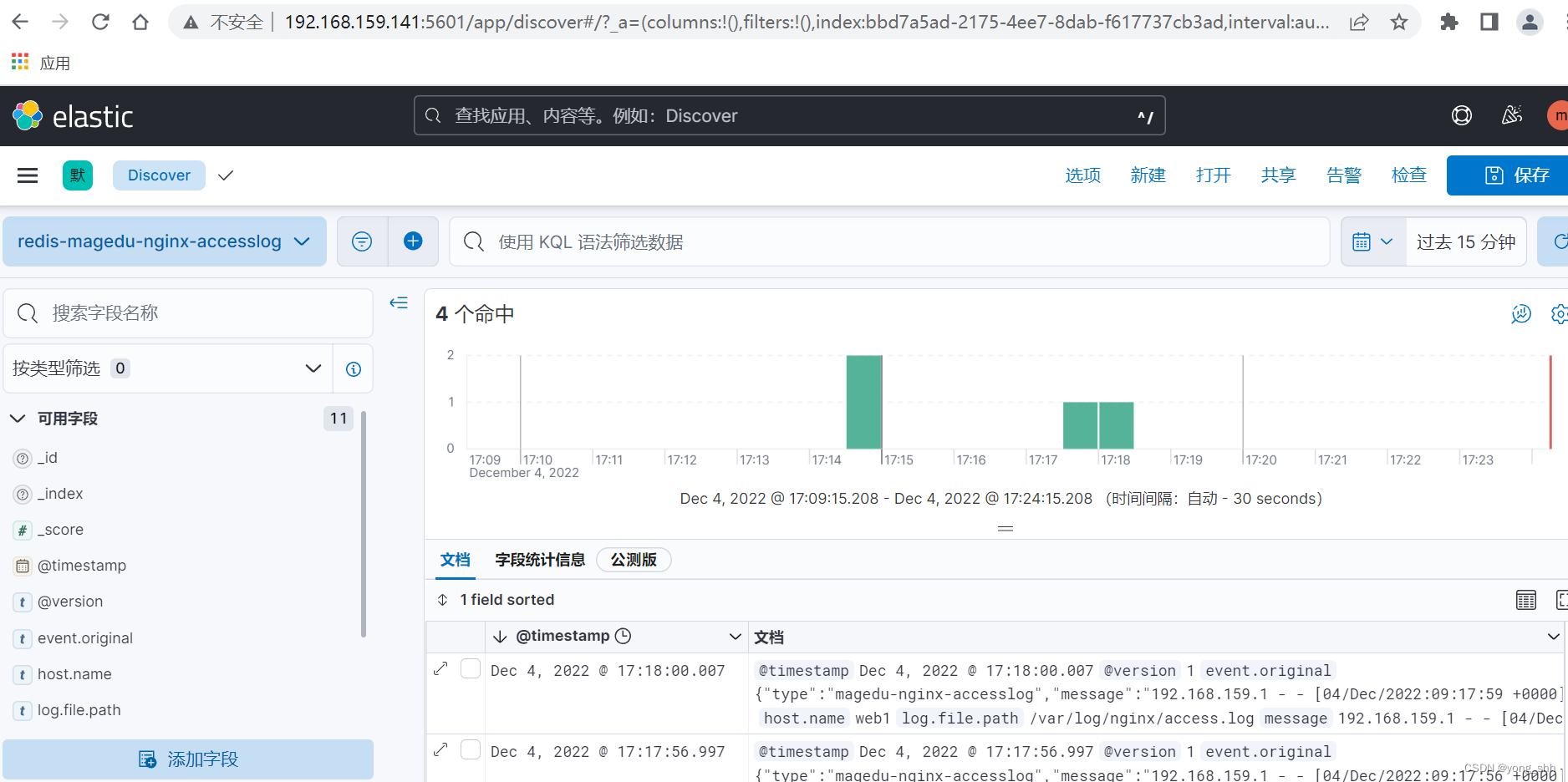

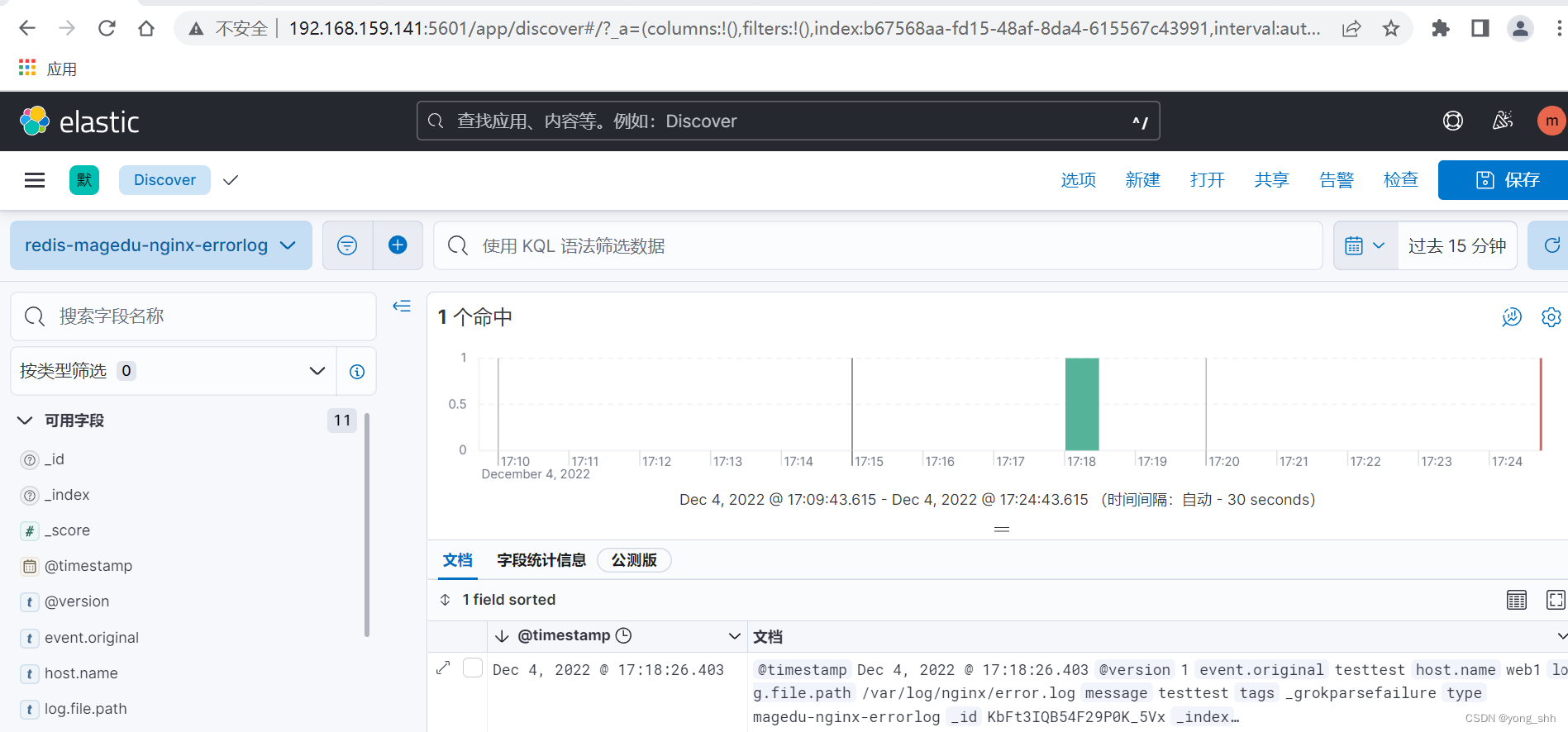

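Query Elasticsearch and check that the indices "redis-magedu-nginx-errorlog" and "redis-magedu-nginx-accesslog" were created.

Log in to Kibana, create data views, and view the logs; they display normally.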

VI. Deploy a single-node ELK stack with docker-compose

Install docker:

```
root@logstash1:/app# tar xvf docker-20.10.19-binary-install.tar.gz
root@logstash1:/app# bash docker-install.sh
```

Deploy ELK with docker-compose:

```
# git clone https://gitee.com/jiege-gitee/elk-docker-compose.git
# cd elk-docker-compose
# docker-compose up -d elasticsearch    # start the elasticsearch container
# docker exec -it elasticsearch /usr/share/elasticsearch/bin/elasticsearch-setup-passwords interactive    # set the account passwords (magedu123 here)
```

Change the account and password Kibana uses to connect to Elasticsearch:

```
# vim kibana/config/kibana.yml
```

Change the account and password Logstash uses to connect to Elasticsearch:

```
# vim logstash/config/logstash.conf
```

Modify the Logstash input and output rules:

```
# vim logstash/config/logstash.conf
```

```
root@logstash1:/apps/elk-docker-compose# cat kibana/config/kibana.yml
server.name: kibana
server.host: "0"
elasticsearch.hosts: [ "http://192.168.159.145:9200" ]
elasticsearch.username: "kibana_system"
elasticsearch.password: "magedu123"
xpack.monitoring.ui.container.elasticsearch.enabled: true
i18n.locale: "zh-CN"
```

```
root@logstash1:/apps/elk-docker-compose# cat logstash/config/logstash.yml
http.host: "0.0.0.0"
xpack.monitoring.enabled: true
xpack.monitoring.elasticsearch.username: logstash_system
xpack.monitoring.elasticsearch.password: "magedu123"
xpack.monitoring.elasticsearch.hosts: [ "http://elasticsearch:9200" ]
```

```
root@logstash1:/apps/elk-docker-compose# cat logstash/config/logstash.conf
input {
  beats {
    codec => "json"
    port => 5044
    type => "beats-log"
  }
  tcp {
    port => 9889
    type => "magedu-tcplog"
    mode => "server"
  }
}

output {
  if [type] == "beats-log" {
    elasticsearch {
      hosts => ["192.168.159.145:9200"]
      index => "%{type}-%{+YYYY.MM.dd}"
      user => "elastic"
      password => "magedu123"
    }
  }
  if [type] == "magedu-tcplog" {
    elasticsearch {
      hosts => ["192.168.159.145:9200"]
      index => "%{type}-%{+YYYY.MM.dd}"
      user => "elastic"
      password => "magedu123"
    }
  }
}
```

Start the services: docker-compose up -d

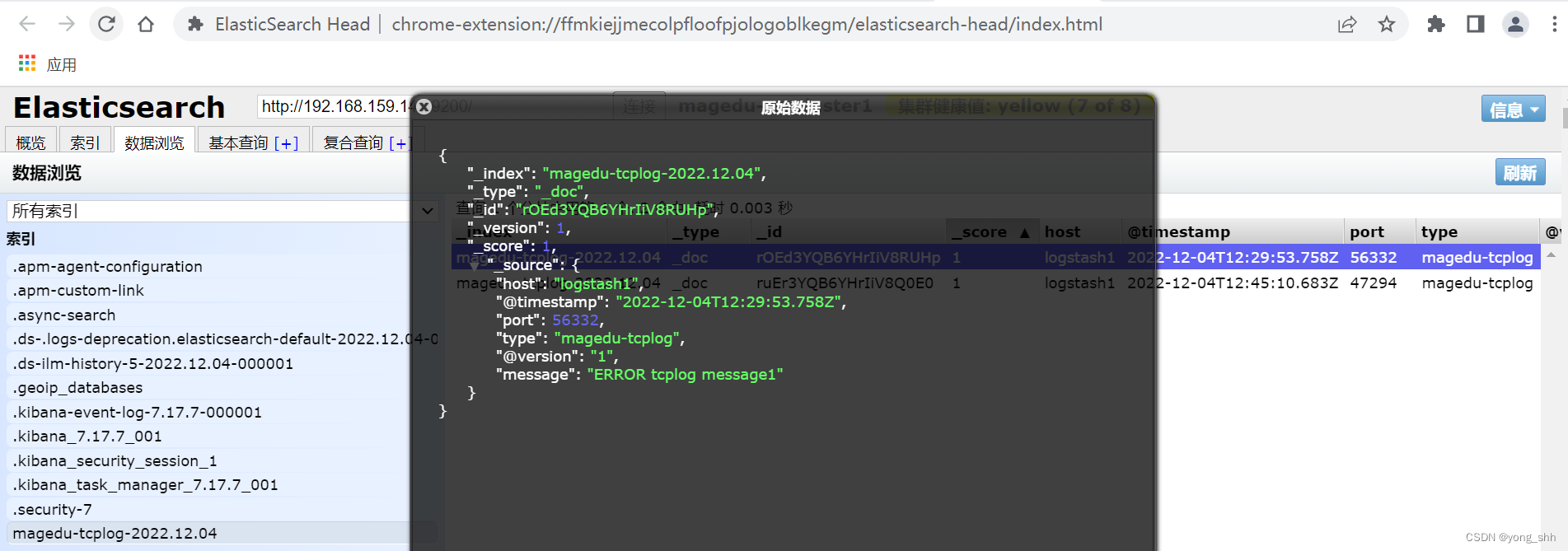

```
root@logstash1:/apps/elk-docker-compose# echo "ERROR tcplog message1" > /dev/tcp/192.168.159.145/9889
```
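The `> /dev/tcp/192.168.159.145/9889` redirection is a bash feature: bash opens a TCP connection and `echo` writes the text followed by a newline. The tcp input's default line codec then turns each newline-terminated line into one event. Below is an equivalent Python sketch. To stay self-contained, it starts a throwaway local listener in a thread in place of Logstash, so the 127.0.0.1 address and the OS-chosen port are assumptions; point `send_line` at 192.168.159.145:9889 to reach the real input. Function names are hypothetical.

```python
import socket
import threading
from typing import List, Tuple


def send_line(host: str, port: int, text: str) -> None:
    """Like `echo "text" > /dev/tcp/host/port`: connect, write text plus newline, close."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall((text + "\n").encode("utf-8"))


def start_fake_listener(received: List[str]) -> Tuple[int, threading.Thread]:
    """Local stand-in for the Logstash tcp input: one connection, newline-split lines."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0: let the OS choose a free port
    server.listen(1)
    port = server.getsockname()[1]

    def serve() -> None:
        with server:
            conn, _ = server.accept()
            with conn:
                data = b""
                while True:
                    chunk = conn.recv(4096)
                    if not chunk:  # sender closed the connection
                        break
                    data += chunk
        received.extend(line for line in data.decode("utf-8").splitlines() if line)

    worker = threading.Thread(target=serve, daemon=True)
    worker.start()
    return port, worker


received: List[str] = []
port, worker = start_fake_listener(received)
send_line("127.0.0.1", port, "ERROR tcplog message1")
worker.join(timeout=5)
print(received)  # ['ERROR tcplog message1']
```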

Log in to Elasticsearch and check that the magedu-tcplog index was created; it was.

Log in to Kibana, create a magedu-tcplog data view; the logs display normally.