Principles

1.1 Introduction to the Bag-of-words model

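The Bag-of-Words (BoW) model is a method that is now also used for image retrieval. It was first used to retrieve text documents. A text is treated as an unordered collection of words, and word order and grammar are ignored. A dictionary is built and the number of occurrences of each dictionary word is counted. The resulting counts are then used to classify texts by content.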
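To make this concrete, here is a minimal sketch for text. It assumes a small hand-picked dictionary (`vocabulary`) and a crude letters-only tokenizer; both are illustrative choices, and `bag_of_words` is a hypothetical helper rather than a library function. Each text becomes one count per dictionary word. Two texts that contain the same words in a different order therefore get the same vector.

```python
import re
from collections import Counter

def bag_of_words(text, vocabulary):
    """One count per dictionary word; word order and grammar are ignored."""
    tokens = re.findall(r"[a-z]+", text.lower())  # crude letters-only tokenizer
    counts = Counter(tokens)
    return [counts[word] for word in vocabulary]

vocabulary = ["cat", "dog", "sat", "mat"]
print(bag_of_words("The cat sat on the mat. The dog sat too.", vocabulary))  # [1, 1, 2, 1]
print(bag_of_words("The dog sat on the mat. The cat sat too.", vocabulary))  # [1, 1, 2, 1]
```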

1.2 Introduction to the Bag-of-features model

Computer vision researchers applied the Bag-of-words method to image retrieval, and the result is Bag-of-features.

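The idea mirrors Bag-of-words. If a document corresponds to an image, then the words of the document correspond to the feature vectors of image patches. A document is made up of many words. Likewise, an image is made up of many patches, and a feature vector is one way of representing a patch. We compute the feature vectors of the patches in N images and cluster them into k classes with k-means, so our bag of words contains k words. When a new image arrives, each of its patch features is assigned to the nearest word (cluster center). Every time a patch is assigned to word A, the count of word A is incremented by 1.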
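The paragraph above can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the PCV implementation used later in this post:

- the 2-D toy "descriptors" stand in for 128-D SIFT vectors;
- k-means is a plain Lloyd iteration with farthest-point initialisation;
- the helper names (`kmeans`, `nearest_word`, `bof_histogram`) are hypothetical.

```python
import numpy as np

def nearest_word(descriptors, centres):
    """Index of the closest centre (visual word) for each descriptor."""
    d2 = ((descriptors[:, None, :] - centres[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)

def kmeans(data, k, n_iter=20):
    """Plain Lloyd's k-means; returns a (k, d) array of cluster centres."""
    # Farthest-point initialisation: start at the first descriptor, then keep
    # adding the descriptor that is farthest from all centres chosen so far.
    centres = [data[0]]
    for _ in range(1, k):
        d2 = ((data[:, None, :] - np.array(centres)[None, :, :]) ** 2).sum(axis=2)
        centres.append(data[d2.min(axis=1).argmax()])
    centres = np.array(centres, dtype=float)
    for _ in range(n_iter):
        labels = nearest_word(data, centres)
        for j in range(k):
            members = data[labels == j]
            if len(members) > 0:  # keep the old centre if a cluster is empty
                centres[j] = members.mean(axis=0)
    return centres

def bof_histogram(descriptors, centres):
    """Bag-of-features histogram: +1 for word A each time a patch maps to A."""
    return np.bincount(nearest_word(descriptors, centres), minlength=len(centres))

# Toy 2-D "descriptors" pooled from the training images: two clear groups.
rng = np.random.default_rng(1)
train = np.vstack([rng.normal(0.0, 0.1, (50, 2)), rng.normal(5.0, 0.1, (50, 2))])
vocab = kmeans(train, k=2)  # a vocabulary of k = 2 visual words

# A new image whose 4 patches fall 3 near the first group and 1 near the second.
new_image = np.array([[0.1, 0.0], [-0.1, 0.05], [0.0, 0.1], [5.1, 4.9]])
print(bof_histogram(new_image, vocab))  # [3 1]
```

With real SIFT data, k would be much larger and the pairwise distances would be computed in batches to keep memory bounded.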

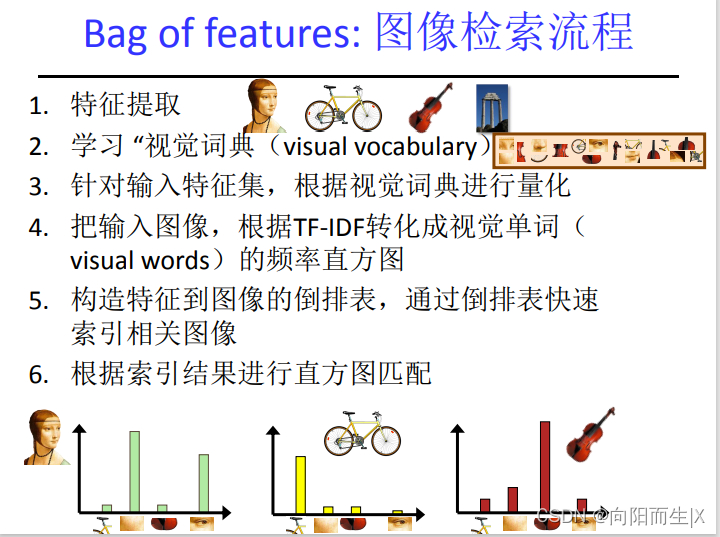

1.3 The Bag-of-features algorithm

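1. Extract local features from the images.

2. Learn a "visual vocabulary".

3. Quantize each input image's feature set against the visual vocabulary.

4. Convert each input image into a frequency histogram of visual words, weighted by TF-IDF.

5. Build an inverted index from visual words to images, and use it to quickly look up relevant images.

6. Perform histogram matching on the images returned by the index.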
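The last two steps (inverted index lookup, then histogram matching) can be illustrated in plain Python. The helper names are hypothetical and the data is a toy example. The sketch assumes:

- each image has already been reduced to a list of visual-word ids;
- the inverted index maps a word id to the images that contain it, so only images sharing at least one word with the query get compared;
- candidates are ranked by the Euclidean distance between raw word-count histograms.

TF-IDF weighting is left out to keep the example short.

```python
import math
from collections import defaultdict

def build_inverted_index(image_words):
    """Map each visual-word id to the set of images that contain it."""
    index = defaultdict(set)
    for image, words in image_words.items():
        for w in words:
            index[w].add(image)
    return index

def histogram(words, k):
    """Word-count histogram over visual-word ids 0..k-1."""
    h = [0] * k
    for w in words:
        h[w] += 1
    return h

def search(query_words, image_words, index, k):
    """Candidates from the inverted index, ranked by histogram distance."""
    # Index lookup: only images sharing at least one word with the query.
    candidates = set()
    for w in set(query_words):
        candidates |= index.get(w, set())
    # Histogram matching: smaller Euclidean distance = better match.
    q = histogram(query_words, k)
    def distance(image):
        return math.dist(q, histogram(image_words[image], k))
    return sorted(candidates, key=lambda image: (distance(image), image))

# Toy database: each image already reduced to its visual-word ids (k = 4 words).
image_words = {"a.jpg": [0, 0, 1], "b.jpg": [1, 2], "c.jpg": [3, 3]}
index = build_inverted_index(image_words)
print(search([0, 1, 1], image_words, index, k=4))  # ['a.jpg', 'b.jpg']
```

Here `c.jpg` is never compared, because it shares no word with the query.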

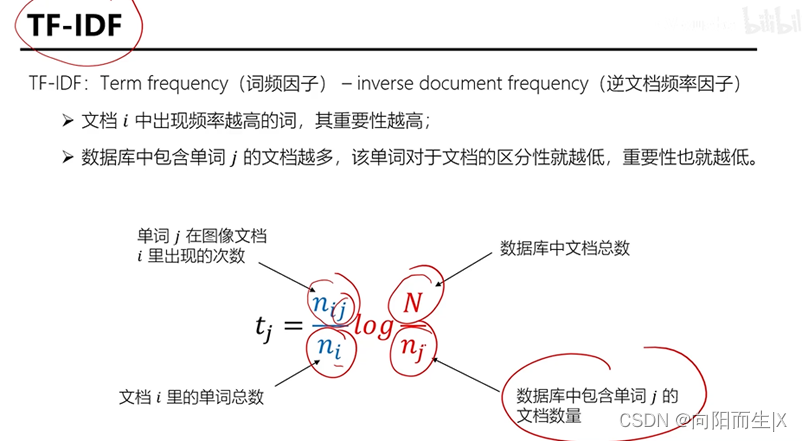

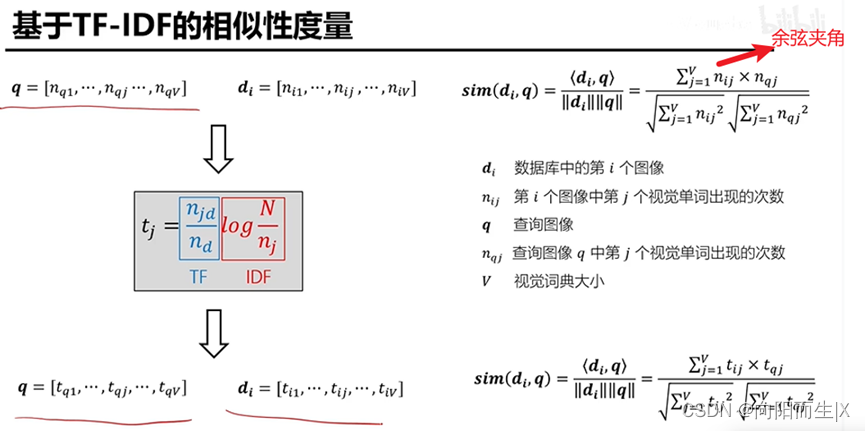

Understanding TF-IDF
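The exact variant used here is not spelled out, so this sketch assumes a common form of the weighting:

- The term frequency of word w in image d is the number of d's features assigned to w, divided by the total number of features in d.
- The inverse document frequency is log(N / n_w), where N is the number of images and n_w is the number of images containing w at least once.
- The weight is their product.

A word that occurs in every image gets idf = log(1) = 0, because it cannot tell images apart. Below is a small pure-Python sketch with toy word lists (`tfidf_vectors` is a hypothetical name).

```python
import math

def tfidf_vectors(docs, k):
    """TF-IDF weighted histograms for lists of visual-word ids in 0..k-1."""
    n_docs = len(docs)
    # Document frequency: in how many images does each word occur at least once?
    df = [0] * k
    for words in docs:
        for w in set(words):
            df[w] += 1
    # Inverse document frequency; words that never occur get weight 0 here.
    idf = [math.log(n_docs / df[w]) if df[w] else 0.0 for w in range(k)]
    vectors = []
    for words in docs:
        counts = [0] * k
        for w in words:
            counts[w] += 1
        total = len(words)
        # Term frequency = share of this image's features that fall on word w.
        vectors.append([counts[w] / total * idf[w] if total else 0.0 for w in range(k)])
    return vectors

docs = [[0, 0, 1], [0, 2], [0, 1, 1, 1]]  # word 0 appears in every image
for v in tfidf_vectors(docs, 3):
    print([round(x, 3) for x in v])
# [0.0, 0.135, 0.0]
# [0.0, 0.0, 0.549]
# [0.0, 0.304, 0.0]
```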

Code practice

Dataset preparation

Feature extraction and vocabulary generation

import pickle
from pcv.imagesearch import vocabulary
from pcv.tools.imtools import get_imlist
from pcv.localdescriptors import sift

# Get the image list
imlist = get_imlist('WYS/')
nbr_images = len(imlist)

# Feature-file names: replace the "jpg" extension with "sift"
featlist = [imlist[i][:-3] + 'sift' for i in range(nbr_images)]

# Extract SIFT features for every image in the folder
for i in range(nbr_images):
    sift.process_image(imlist[i], featlist[i])

# Train the vocabulary: 1000 visual words, subsampling the descriptors by 10
voc = vocabulary.Vocabulary('ukbenchtest')
voc.train(featlist, 1000, 10)

# Save the vocabulary
with open('WYS/vocabulary.pkl', 'wb') as f:
    pickle.dump(voc, f)
print('vocabulary is:', voc.name, voc.nbr_words)
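All three scripts in this post derive each feature-file name with `imlist[i][:-3] + 'sift'`. That works for `.jpg` files but silently breaks for other extension lengths: `WYS/002.jpeg` becomes `WYS/002.jsift`. Below is a hypothetical helper, `sift_path` (not part of PCV), built on the standard `os.path.splitext`. It could replace the list comprehension as `featlist = [sift_path(p) for p in imlist]`.

```python
import os

def sift_path(image_path):
    """Return the .sift feature-file path that goes with an image path."""
    # os.path.splitext drops the extension whatever its length (.jpg, .jpeg, .png).
    root, _ext = os.path.splitext(image_path)
    return root + ".sift"

print(sift_path("WYS/001.jpg"))    # WYS/001.sift
print(sift_path("WYS/002.jpeg"))   # WYS/002.sift
print("WYS/002.jpeg"[:-3] + "sift")  # WYS/002.jsift (what the slicing produces)
```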

Quantizing the input feature sets

import pickle
import sqlite3
from pcv.imagesearch import imagesearch
from pcv.localdescriptors import sift
from pcv.tools.imtools import get_imlist

# Get the image list
imlist = get_imlist('WYS')
nbr_images = len(imlist)

# Get the feature-file list
featlist = [imlist[i][:-3] + 'sift' for i in range(nbr_images)]

# Load the vocabulary (forward slashes work on Windows and elsewhere)
with open('WYS/vocabulary.pkl', 'rb') as f:
    voc = pickle.load(f)

# Create the index (an SQLite database file)
indx = imagesearch.Indexer('testImaAdd.db', voc)
indx.create_tables()

# Go through the images (at most the first 120) and project their features onto the vocabulary
for i in range(nbr_images)[:120]:
    locs, descr = sift.read_features_from_file(featlist[i])
    indx.add_to_index(imlist[i], descr)

# Commit to the database
indx.db_commit()

# Sanity check: number of indexed images and the first entry
con = sqlite3.connect('testImaAdd.db')
print(con.execute('select count (filename) from imlist').fetchone())
print(con.execute('select * from imlist').fetchone())
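`Indexer.add_to_index` records which visual words each image contains, and that record is what makes an inverted-index lookup possible at search time. The sketch below imitates the idea with the standard `sqlite3` module and an in-memory database:

- The `imlist(filename)` table matches the one queried above.
- The `imwords(imid, wordid, vocname)` layout is an assumption about how such a word table can look.
- `add_image` and `candidates_for` are hypothetical helpers.

The sketch also shows that image ids are SQLite rowids, which start at 1.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("create table imlist(filename)")
# Assumed layout for the word table: one row per (image, visual word) pair.
con.execute("create table imwords(imid, wordid, vocname)")

def add_image(filename, word_ids, vocname="ukbenchtest"):
    """Insert an image, then one imwords row per distinct visual word it contains."""
    cur = con.execute("insert into imlist(filename) values (?)", (filename,))
    imid = cur.lastrowid  # SQLite rowids start at 1, not 0
    con.executemany(
        "insert into imwords(imid, wordid, vocname) values (?, ?, ?)",
        [(imid, w, vocname) for w in sorted(set(word_ids))],
    )
    return imid

def candidates_for(word_ids):
    """(imid, shared-word count) for images sharing words with the query, best first."""
    words = sorted(set(word_ids))
    if not words:
        return []
    placeholders = ",".join("?" * len(words))
    return con.execute(
        "select imid, count(*) as shared from imwords "
        f"where wordid in ({placeholders}) "
        "group by imid order by shared desc, imid",
        words,
    ).fetchall()

add_image("WYS/a.jpg", [1, 2, 2, 3])
add_image("WYS/b.jpg", [3, 4])
add_image("WYS/c.jpg", [5])
con.commit()

print(con.execute("select count(filename) from imlist").fetchone())  # (3,)
print(candidates_for([2, 3]))  # [(1, 2), (2, 1)]
```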

Building the image index and retrieving images

import pickle

from pcv.imagesearch import imagesearch

from pcv.geometry import homography

from pcv.tools.imtools import get_imlist

from pcv.localdescriptors import sift

import warnings

warnings.filterwarnings("ignore")

# load image list and vocabulary

# 载入图像列表

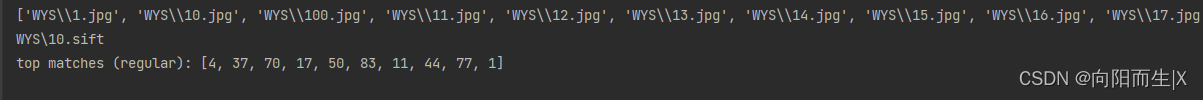

imlist = get_imlist(r'WYS')

print(imlist)

nbr_images = len(imlist)

# 载入特征列表

print(imlist[1][:-3] + 'sift')

featlist = [imlist[i][:-3] + 'sift' for i in range(nbr_images)]

# 载入词汇

with open(r'WYS\vocabulary.pkl', 'rb') as f:

voc = pickle.load(f, encoding='iso-8859-1')

src = imagesearch.Searcher('testImaAdd.db', voc) # Searcher类读入图像的单词直方图执行查询

# index of query image and number of results to return

# 查询图像索引和查询返回的图像数

q_ind = 3

nbr_results = 10

# regular query

# 常规查询(按欧式距离对结果排序)

res_reg = [w[1] for w in src.query(imlist[q_ind])[:nbr_results]] # 查询的结果

print('top matches (regular):', res_reg)

# load image features for query image

# 载入查询图像特征进行匹配

q_locs, q_descr = sift.read_features_from_file(featlist[q_ind])

fp = homography.make_homog(q_locs[:, :2].T)

# RANSAC model for homography fitting

# 用单应性进行拟合建立RANSAC模型

model = homography.RansacModel()

rank = {}

# load image features for result

# 载入候选图像的特征

for ndx in res_reg[1:]:

locs, descr = sift.read_features_from_file(featlist[ndx]) # because 'ndx' is a rowid of the DB that starts at 1

# get matches

matches = sift.match(q_descr, descr)

ind = matches.nonzero()[0]

ind2 = matches[ind]

tp = homography.make_homog(locs[:, :2].T)

# compute homography, count inliers. if not enough matches return empty list

# 计算单应性矩阵

try:

H, inliers = homography.H_from_ransac(fp[:, ind], tp[:, ind2], model, match_theshold=4)

except:

inliers = []

# store inlier count

rank[ndx] = len(inliers)

# sort dictionary to get the most inliers first

# 对字典进行排序,可以得到重排之后的查询结果

sorted_rank = sorted(rank.items(), key=lambda t: t[1], reverse=True)

res_geom = [res_reg[0]] + [s[0] for s in sorted_rank]

print('top matches (homography):', res_geom)

# 显示查询结果

imagesearch.plot_results(src, res_reg[:6]) # 常规查询

imagesearch.plot_results(src, res_geom[:6]) # 重排后的结果
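The geometric re-ranking step boils down to a simple rule. The first regular hit stays on top, since it is presumably the query image itself at distance 0. The remaining candidates are sorted by their number of RANSAC inliers. Below is a stand-alone sketch of just that ordering logic, using a hypothetical function `rerank_by_inliers` and made-up image ids and inlier counts. It makes the tie-breaking explicit.

```python
def rerank_by_inliers(res_reg, inliers):
    """Query image stays first; other hits are sorted by RANSAC inlier count."""
    query, rest = res_reg[0], res_reg[1:]
    # Missing entries count as 0 inliers; sorted() is stable even with reverse=True,
    # so ties keep their regular (histogram-distance) order.
    return [query] + sorted(rest, key=lambda imid: inliers.get(imid, 0), reverse=True)

# Made-up image ids and inlier counts, purely for illustration.
print(rerank_by_inliers([4, 7, 2, 9, 5], {7: 12, 2: 40, 9: 12, 5: 0}))  # [4, 2, 7, 9, 5]
```

Images 7 and 9 both have 12 inliers, so they keep the order the regular query gave them.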

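Copyright notice: this is an original article by m0_50945459, licensed under CC 4.0 BY-SA. If you repost it, please include a link to the original source and this notice.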